AirSketch: Maya

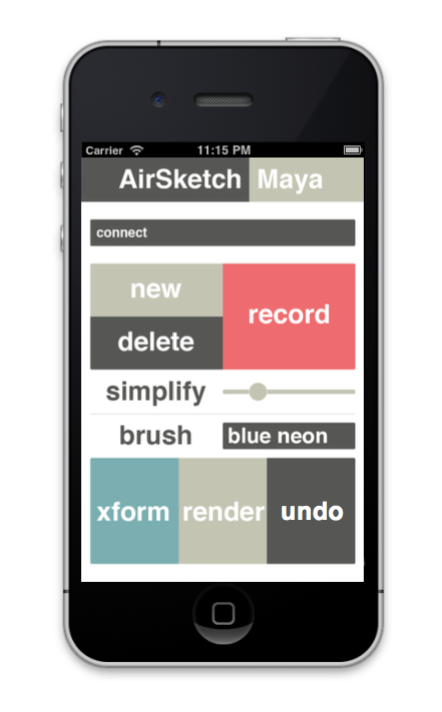

AirSketch: Maya is a prototype iOS app that turns your iPhone into a true 3D gestural input tool for Autodesk Maya.

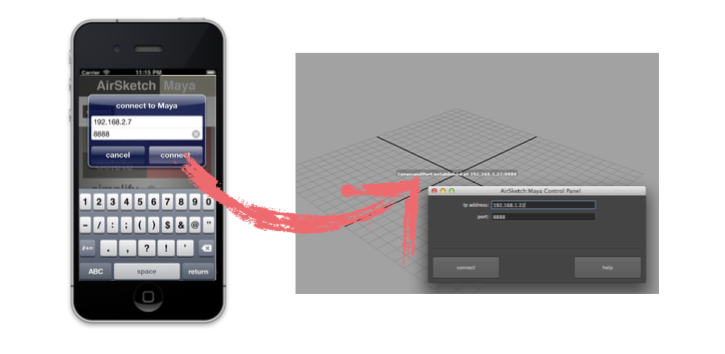

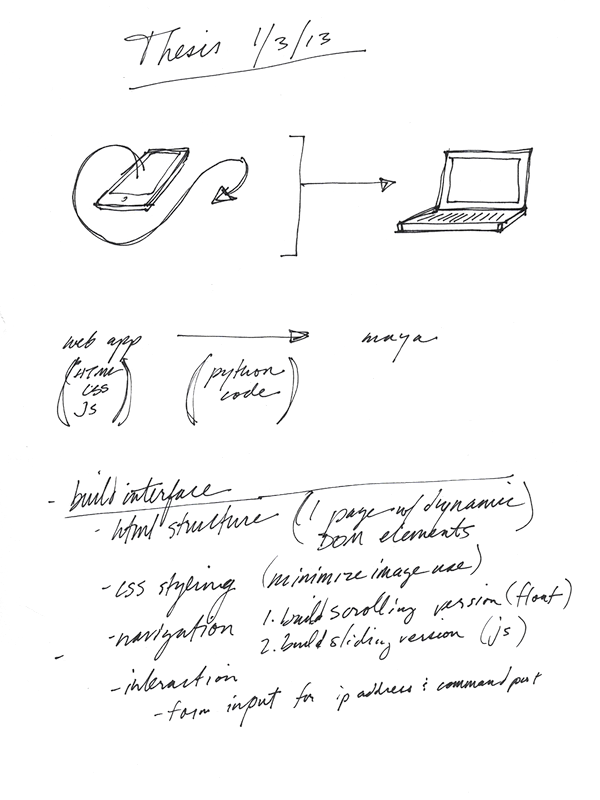

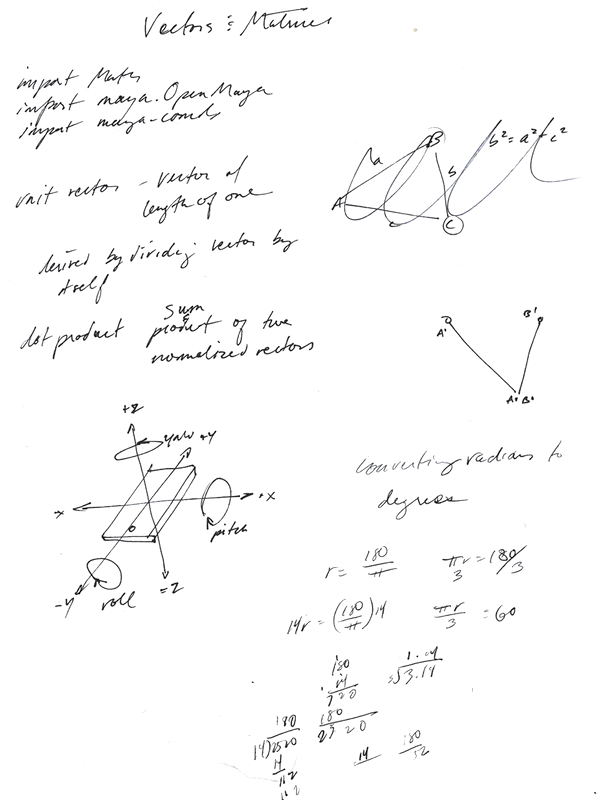

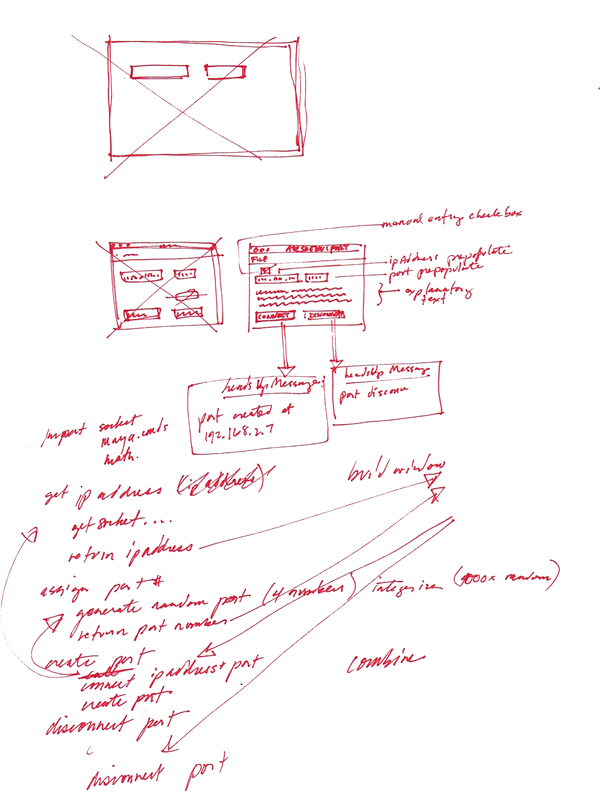

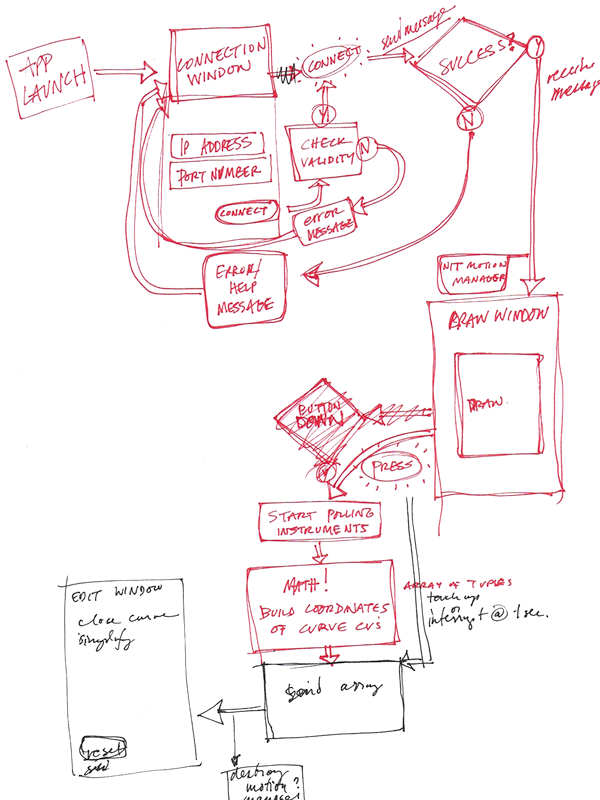

AirSketch works by reading the device’s gyroscope and accelerometer data and sending it wirelessly to Autodesk Maya, the 3D modeling and animation software. Maya then translates this data into a true 3D stroke, which can be rendered in a variety of ways, all controlled from the mobile app’s simple interface.
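The write-up does not say how Maya turns sensor readings into a stroke. As a rough illustration only, the sketch below uses plain dead reckoning: it integrates acceleration twice to get a path of 3D points. The `Sample` packet layout and `integrate_stroke` helper are hypothetical, and the sketch assumes the acceleration has already had gravity removed and been rotated into world coordinates (which is what the gyroscope data would be needed for).

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    """Hypothetical sensor packet: timestamp in seconds and gravity-free
    acceleration in m/s^2, assumed to be in world coordinates already."""
    t: float
    accel: Vec3


def integrate_stroke(samples: List[Sample]) -> List[Vec3]:
    """Dead-reckon stroke points by integrating acceleration twice
    (semi-implicit Euler), starting at the origin with zero velocity."""
    points: List[Vec3] = [(0.0, 0.0, 0.0)]
    vel = [0.0, 0.0, 0.0]
    pos = [0.0, 0.0, 0.0]
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order packets
        for i in range(3):
            vel[i] += cur.accel[i] * dt  # update velocity first...
            pos[i] += vel[i] * dt        # ...then position from the new velocity
        points.append((pos[0], pos[1], pos[2]))
    return points
```

Inside Maya, a point list like this could be turned into geometry with `maya.cmds.curve(p=points, d=1)`. Raw double integration drifts quickly, so a real implementation would likely need filtering or a different mapping from motion to stroke.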

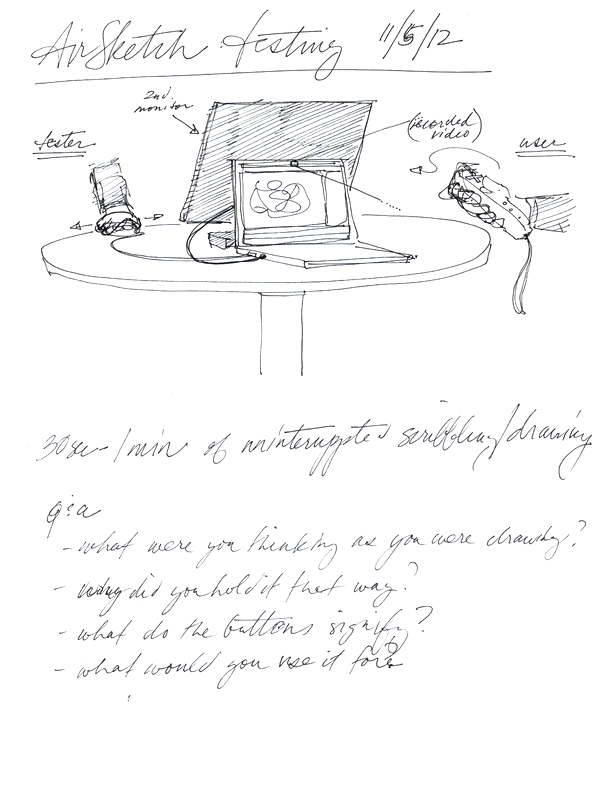

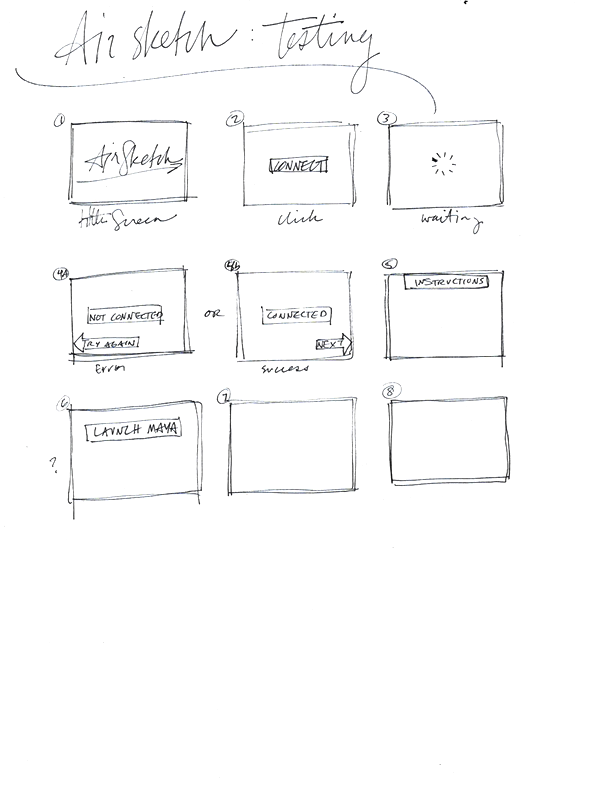

AirSketch was born out of a need to find new ways to generate imagery and to bridge the gap between gesture drawing and 3D modeling and animation. I initially conceived the project with a Nintendo Wiimote as the input device, but the lack of drivers forced a change of course to iOS development. The final product is a mix of Objective-C (iOS) and Python scripting (Maya). Creating AirSketch involved a significant amount of research and testing, not only in programming and network communication (which I had to teach myself) but also in design and branding.

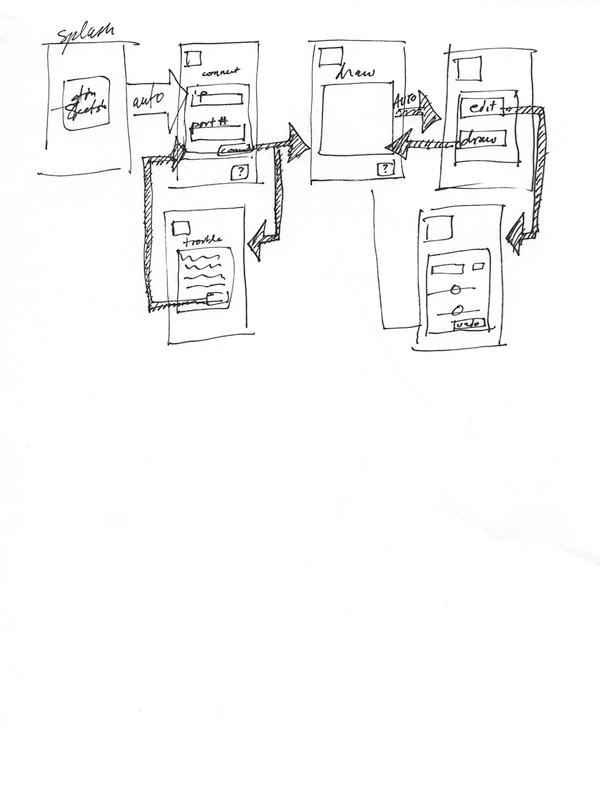

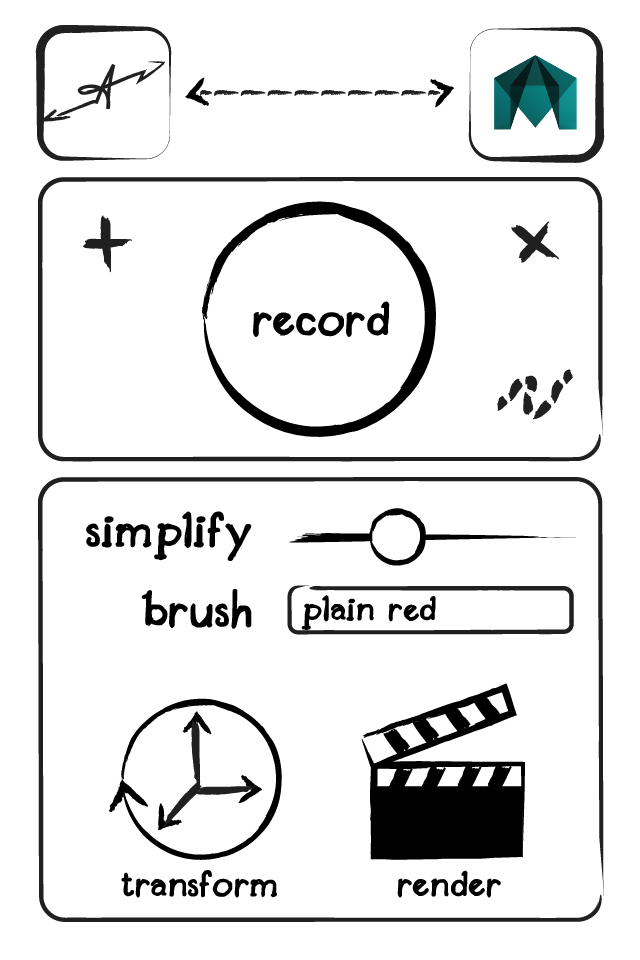

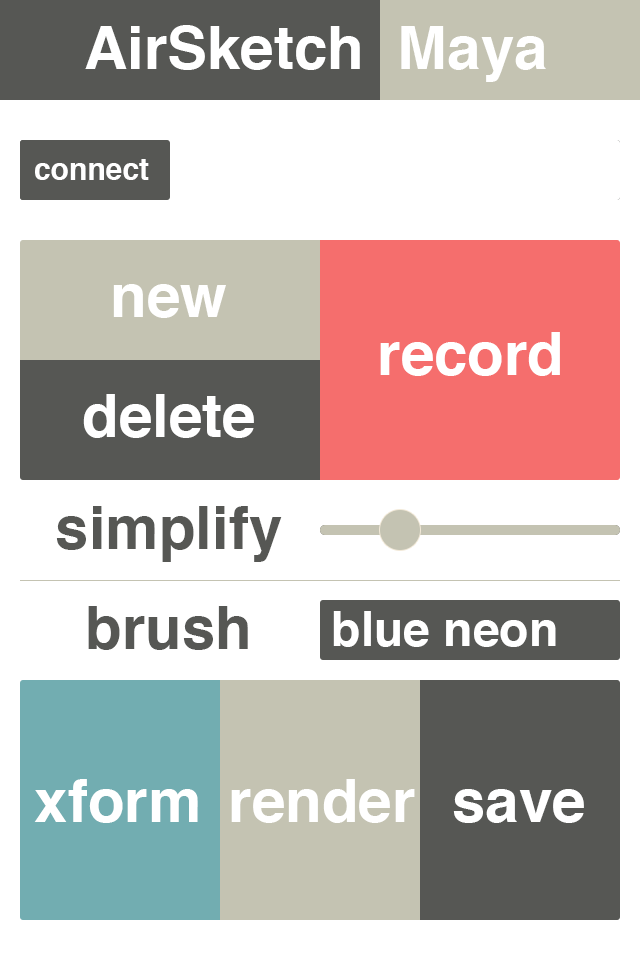

The interface is simple but powerful. From a single screen, the user can connect to their desktop over a wireless connection and record, delete, and simplify strokes. They can also change the brush type (black ink or blue neon, for example), move the stroke, render, and save the scene.
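The source does not describe how "simplify" works. One standard way to reduce a dense stroke to fewer points is the Ramer-Douglas-Peucker algorithm, sketched below as an assumption rather than as AirSketch's actual method. The `simplify_stroke` function and its `tolerance` parameter (a distance in scene units) are hypothetical names.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _point_segment_distance(p: Vec3, a: Vec3, b: Vec3) -> float:
    """Distance from point p to the 3D line segment a-b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len2 = sum(c * c for c in ab)
    if ab_len2 == 0.0:
        return math.dist(p, a)  # degenerate segment: a and b coincide
    # Project p onto the segment, clamping the parameter to [0, 1].
    t = max(0.0, min(1.0, sum(ap[i] * ab[i] for i in range(3)) / ab_len2))
    closest = tuple(a[i] + t * ab[i] for i in range(3))
    return math.dist(p, closest)


def simplify_stroke(points: List[Vec3], tolerance: float) -> List[Vec3]:
    """Ramer-Douglas-Peucker: keep both endpoints, and recursively keep the
    interior point farthest from the chord if it deviates more than tolerance."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    max_dist, index = -1.0, 0
    for i in range(1, len(points) - 1):
        d = _point_segment_distance(points[i], first, last)
        if d > max_dist:
            max_dist, index = d, i
    if max_dist <= tolerance:
        return [first, last]  # every interior point is close enough to drop
    left = simplify_stroke(points[: index + 1], tolerance)
    right = simplify_stroke(points[index:], tolerance)
    return left[:-1] + right  # avoid duplicating the split point
```

A larger tolerance removes more points and smooths out small hand jitter, at the cost of flattening fine detail in the gesture.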

Although the design is flat and boxy, the buttons have a subtle 3-pixel rounded bevel on their corners. An animated overlay prevents the user from accidentally pressing a button that is not available at that moment. The color, shape, and size of the buttons also indicate, if not their specific function, then at least their order of use and importance.

I created two short animations to demonstrate AirSketch:

Upon graduation, I decided to release the source code to the public for further development. You can download it from my GitHub repository.